Reduce Your Costs by 30% When Using GPT-3 for Python Code

Large language models (LLMs) like ChatGPT and GPT-3 excel at analyzing both natural languages, such as English, and programming languages, such as Python. This dual ability makes LLMs very useful for day-to-day work, as they accept, process, and produce both text and code. In this blog, we present simple and efficient methods for reducing the number of tokens required by GPT-3 to represent a typical Python code. All the proposed methods are available on GitHub.

Introduction – Code Tokenization

LLMs like GPT-3 employ a tokenizer – a pre-processing component that divides a text into words or sub-words, which are then converted to tokens via a look-up table. For example, the sentence “My favorite color is indigo.” is converted by a GPT-3 tokenizer to seven tokens:

Figure 1 : Tokens of natural language. Example generated from here.

As shown in Figure 1, the tokenizer replaced the first four words (with whitespace before) by a dedicated token. The word ‘indigo’ was split into two tokens due to sub-word tokenization. The dot character in the end was also converted to a token.

An LLM can only accept a limited number of input tokens. Furthermore, for commercial LLMs such as GPT-3, the pricing is done per input (and output) tokens. GPT-3 Davinci model, for example, costs 0.02$ per 1000 tokens. As a result, reducing the number of input tokens for an LLM translates to cost reduction.

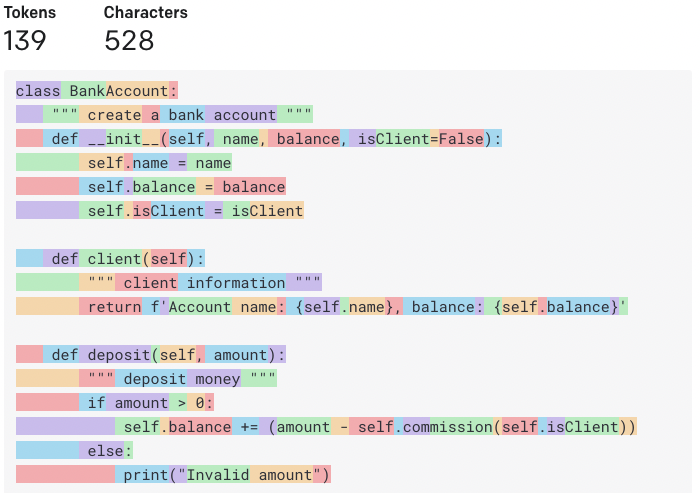

When converting natural language text to tokens, we have little flexibility or control over the tokenization process. When converting Python code to tokens, the situation is different. Let’s consider an example. Figure 2 illustrates the tokenization results of a simple Python class. The class has 528 characters in total, which are converted into 215 tokens. Examining the tokenization process reveals that each space at the start of a Python line is converted to a different token (!). This type of conversion is highly inefficient for indent-based language, like Python.

Figure 2: Tokens of simple Python class. Example generated from here

Case Study – Code Tabification

Luckily, there’s something we can do to reduce the number of tokens – Code Tabification. With Code Tabification, we simply replace groups of spaces by tabs. If we convert spaces at the beginning of a python code by tab characters (‘ ‘ -> ‘\t’), we can significantly reduce the number of code tokens, while fully preserving the code’s functionality and readability. Figure 3 depicts the class tokens after Code Tabification.

Figure 3: Tokens of a simple Python class after Code Tabification. Example generated from here

We see from Figure 3 that Code Tabification reduced the number of tokens by 30% (151/215). Since Python is an indent-based language, Code Tabification will have a similar effect on almost any piece of Python code.

Since tab-indented Python code is perfectly legal and fairly common, the LLM’s performance should not be degraded. In fact, it might even be enhanced by the shorter and more concise token representation.

Additional Token-Minimization Techniques

Code Tabification is easy to implement, highly effective, and preserves the code functionality and readability. Additional token-minimization techniques that preserve the code functionality, and don’t significantly affect the readability, include:

- Combining imports (‘import a; import b’ -> ‘import a,b’)

- Combining newline characters (‘\n\n’->’\n’)

- Removing trailing spaces at the end of lines (‘\n’->’\n’)

Note that there are other functionality-preserving methods for compressing a Python code, like removing type hints, removing comments, removing pass statements, renaming local variables, eliminating spaces and newlines, and more. However, these transformations reduce the code readability, and may impair the LLM’s ability to understand and process the code.

What About Code-Oriented Models?

In addition to general LLMs such as ChatGPT and GPT-3, there are also code-oriented models like Codex. Code-oriented models were trained specifically for code, and their tokenizers are also tailored for code processing.

Figure 4 shows an example for tokenization of a code-oriented model. As can be seen, the code-oriented tokenizer automatically converted 4-spaces to a single token. Hence, code-oriented models don’t require the Code Tabification operation. Other optimizations, such as newline compression, were also performed automatically by the Codex tokenizer.

Figure 4: Tokens of a simple Python class with code-oriented tokenizer (Codex). Example generated from here

However, code-oriented models are best suited for plain code generation. For tasks where the input or output is a combination of text and code, such as explaining a piece of code or generating code in response to a complicated natural language prompt, general LLMs like ChatGPT and GPT-3 may perform better. Furthermore, code-oriented models are usually slower, and more expensive.

Conclusion

In this blog post, we proposed efficient and simple techniques for reducing the number of GPT-3 tokens for Python code. We placed special emphasis on techniques that preserve both functionality and readability of the code. All the proposed methods are available on GitHub.