Big Data Testing

Businesses around the world collect massive amounts of data from various sources. But not all of that data is accurate or usable, and the applications that process it can introduce errors of their own. That’s where big data testing comes into play.

Big data testing checks the functionality of big data applications, ensuring that they work correctly and provide accurate insights. Because the data involved is so large and varied, it is more complex than traditional database testing.

So, let’s discuss the key strategies for big data testing, its benefits and challenges, and best practices for ensuring the successful testing and use of big data applications.

What is Big Data Testing?

Big data testing is the process of verifying the functionality of big data applications before they are put into production.

It covers the application’s data ingestion systems, data processing components, storage tools, and analytics tools. It confirms that the data passing through each of them is valid, complete, and consistent.

Big data testing draws on a range of strategies and often automates tests across these procedures, tools, and technologies. Its main goal is to make sure a big data application meets business requirements and delivers correct data to decision-makers.

Strategies of Big Data Testing

Big data testing requires specialized strategies due to the volume, variety, velocity, and veracity of the data involved. Here are some key strategies:

1. Data ingestion testing

Make sure that data from different sources is correctly loaded into the big data system. Assess the ingestion process by checking that all expected records and fields arrive intact, and by measuring response time. Tools commonly used here include Apache Kafka, Apache NiFi, and Talend.
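A basic ingestion check compares what left the source with what arrived in the target. The sketch below is illustrative and assumes a few things: records are plain Python dicts, each one carries a unique `id` field, and the names `check_ingestion` and `IngestionReport` are hypothetical. It reports missing, unexpected, and duplicated records. Response time would be measured separately.

```python
from dataclasses import dataclass, field


@dataclass
class IngestionReport:
    source_count: int
    target_count: int
    missing_ids: set = field(default_factory=set)     # in source, never arrived
    unexpected_ids: set = field(default_factory=set)  # arrived, but not in source
    duplicate_count: int = 0                          # extra copies in target

    @property
    def passed(self) -> bool:
        return not self.missing_ids and not self.unexpected_ids and self.duplicate_count == 0


def check_ingestion(source_records, ingested_records, key="id"):
    """Reconcile source records with ingested records by a (hypothetical) unique key."""
    source = list(source_records)
    target = list(ingested_records)
    source_ids = {r[key] for r in source}
    target_ids = {r[key] for r in target}
    return IngestionReport(
        source_count=len(source),
        target_count=len(target),
        missing_ids=source_ids - target_ids,
        unexpected_ids=target_ids - source_ids,
        duplicate_count=len(target) - len(target_ids),
    )


source = [{"id": 1}, {"id": 2}, {"id": 3}]
ingested = [{"id": 1}, {"id": 3}, {"id": 3}]
report = check_ingestion(source, ingested)
print(report.missing_ids, report.duplicate_count, report.passed)  # {2} 1 False
```

In a real system, the two record sets would come from the source system and the big data store rather than from in-memory lists.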

2. Data quality testing

Ensure the integrity, accuracy, and reliability of data collected for analysis using data profiling, validation, and cleansing practices. Tools for this include Informatica, Talend, and IBM InfoSphere.
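The sketch below shows in miniature the kind of profiling such tools perform. It counts missing required values, out-of-range numbers, and exact duplicate records. It assumes records are flat dicts with string keys and hashable values. The function name, field names, and the age bounds are hypothetical examples, not rules from any particular tool.

```python
def profile_quality(records, required_fields, ranges=None):
    """Return simple data-quality metrics for a list of flat dict records.

    required_fields: fields that must be present and non-null.
    ranges: optional {field: (min, max)} bounds for numeric fields.
    """
    ranges = ranges or {}
    null_counts = {f: 0 for f in required_fields}
    out_of_range = {f: 0 for f in ranges}
    seen = set()
    duplicates = 0
    for rec in records:
        # Completeness: required fields must exist and be non-null.
        for f in required_fields:
            if rec.get(f) is None:
                null_counts[f] += 1
        # Validity: numeric values must fall inside the expected bounds.
        for f, (low, high) in ranges.items():
            value = rec.get(f)
            if value is not None and not (low <= value <= high):
                out_of_range[f] += 1
        # Consistency: flag records that are exact copies of an earlier one.
        fingerprint = tuple(sorted(rec.items()))
        if fingerprint in seen:
            duplicates += 1
        else:
            seen.add(fingerprint)
    return {"total": len(records), "nulls": null_counts,
            "out_of_range": out_of_range, "duplicates": duplicates}


records = [
    {"id": 1, "age": 34, "country": "DE"},
    {"id": 2, "age": None, "country": "FR"},
    {"id": 3, "age": 212, "country": "US"},
    {"id": 1, "age": 34, "country": "DE"},
]
print(profile_quality(records, ["id", "age", "country"], {"age": (0, 120)}))
```

For this sample, the report shows one missing `age`, one out-of-range `age`, and one duplicate record.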

3. ETL (extract, transform, load) testing

Verify that data is extracted correctly from source systems, transformed according to the required business logic, and loaded into the target systems. Test data transformation rules, data load time, and data integrity. Apache Hive, Apache Pig, and SQL queries are useful here.
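Source-to-target reconciliation is a common way to test an ETL job. Compare row counts, compare an aggregate such as a total, and re-apply the transformation rule to each source row to confirm that the loaded row matches. In the sketch below, the business rule (amounts arrive in cents and are stored in currency units; country codes are upper-cased) and all names are hypothetical assumptions for illustration.

```python
def transform(row):
    """Hypothetical business rule: cents -> currency units, normalized country code."""
    return {
        "order_id": row["order_id"],
        "amount": row["amount_cents"] / 100,
        "country": row["country"].strip().upper(),
    }


def reconcile(source_rows, target_rows, tolerance=1e-6):
    """Return a list of problems found when comparing source rows with loaded rows."""
    problems = []
    # Completeness: every extracted row should have been loaded.
    if len(source_rows) != len(target_rows):
        problems.append(f"row count mismatch: {len(source_rows)} vs {len(target_rows)}")
    # Integrity: aggregates must survive the transformation.
    source_total = sum(r["amount_cents"] for r in source_rows) / 100
    target_total = sum(r["amount"] for r in target_rows)
    if abs(source_total - target_total) > tolerance:
        problems.append(f"amount total mismatch: {source_total} vs {target_total}")
    # Transformation rules: each loaded row must equal the expected transformed row.
    expected = {r["order_id"]: transform(r) for r in source_rows}
    for row in target_rows:
        if expected.get(row["order_id"]) != row:
            problems.append(f"order {row['order_id']} does not match transformation rule")
    return problems


source = [{"order_id": 1, "amount_cents": 1999, "country": " de"},
          {"order_id": 2, "amount_cents": 500, "country": "fr"}]
target = [transform(r) for r in source]
print(reconcile(source, target))  # []
target[1]["country"] = "fr"       # simulate a loader that skipped normalization
print(reconcile(source, target))  # ['order 2 does not match transformation rule']
```

In practice, the same checks are often written as SQL queries against the source and target tables. The Python version only makes the logic explicit.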

4. Performance testing

Evaluate how big data applications perform in different environments. Run load, stress, and scalability tests to verify that the system can handle large volumes of data. Tools such as JMeter, Gatling, and BlazeMeter can be used for this.
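The core idea of a scalability test is to run the same workload on growing inputs and watch whether throughput holds steady. The single-machine sketch below shows this. It is not a substitute for distributed load tools such as JMeter. `process_batch` is a hypothetical stand-in for the real workload, and the synthetic data shape is an assumption.

```python
import time


def process_batch(records):
    """Hypothetical workload under test: sum values per key."""
    totals = {}
    for key, value in records:
        totals[key] = totals.get(key, 0) + value
    return totals


def measure_scalability(workload, sizes, repeats=3):
    """Run `workload` on synthetic inputs of increasing size and report throughput."""
    results = []
    for n in sizes:
        data = [(i % 100, 1) for i in range(n)]  # synthetic (key, value) pairs
        best = float("inf")
        for _ in range(repeats):  # keep the best run to reduce timing noise
            start = time.perf_counter()
            workload(data)
            best = min(best, time.perf_counter() - start)
        rate = n / best if best > 0 else float("inf")
        results.append({"records": n, "seconds": best, "records_per_sec": rate})
    return results


for row in measure_scalability(process_batch, [10_000, 100_000, 1_000_000]):
    print(f"{row['records']:>9} records  {row['records_per_sec']:,.0f} records/s")
```

If throughput drops sharply as the input grows, the workload probably does not scale linearly. That is the kind of issue a full scalability test on the real cluster should investigate.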

5. Security testing

Ensure data privacy and protection against unauthorized access. Test for vulnerabilities, verify data encryption, and check access control mechanisms. Tools like Apache Ranger, Cloudera Navigator, and IBM Guardium can be employed.
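One narrow privacy check is to scan exported or downstream data for values that look like unmasked personal information. The sketch below does this with two deliberately simplified regular expressions. It assumes email addresses and US-style social security numbers should never appear in clear text in the scanned fields. The patterns, field names, and `find_unmasked` are hypothetical. Encryption and access control still need dedicated tools like those above.

```python
import re

# Simplified, illustrative patterns; production scanners use far more robust rules.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_unmasked(records, fields):
    """Return (row index, field, pattern name) for values that look like unmasked data."""
    findings = []
    for i, rec in enumerate(records):
        for field in fields:
            value = str(rec.get(field, ""))
            for name, pattern in SENSITIVE_PATTERNS.items():
                if pattern.search(value):
                    findings.append((i, field, name))
    return findings


exported = [
    {"user": "u1", "contact": "***@***.com", "note": "ok"},
    {"user": "u2", "contact": "jane.doe@example.com", "note": "SSN 123-45-6789"},
]
print(find_unmasked(exported, ["contact", "note"]))
# [(1, 'contact', 'email'), (1, 'note', 'us_ssn')]
```

A non-empty result would fail the test and point to the rows and fields where masking did not happen.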

6. Data processing testing

Validate the accuracy and efficiency of data processing frameworks. Ensure that data processing jobs run as planned and produce correct results efficiently. Common frameworks in this area include Apache Spark, Apache Flink, and Hadoop.
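A useful processing test checks that a partitioned (map/reduce-style) job gives the same answer as a simple single-pass reference, whatever the number of partitions. The sketch below simulates partitioning in plain Python with a word count. It illustrates the testing idea only. It does not use Spark, Flink, or Hadoop APIs, and all function names are hypothetical.

```python
from collections import Counter
from functools import reduce


def map_partition(lines):
    """Map step: count words within one partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(lines, num_partitions):
    """Simulated partitioned job: split the input, map each partition, then merge."""
    partitions = [lines[i::num_partitions] for i in range(num_partitions)]
    return reduce(lambda a, b: a + b, (map_partition(p) for p in partitions), Counter())


def reference_word_count(lines):
    """Single-pass oracle used to check the partitioned result."""
    return Counter(word for line in lines for word in line.lower().split())


lines = ["Big data testing", "testing big data pipelines", "data"]
for n in (1, 2, 4):
    # The result must not depend on how the input was partitioned.
    assert distributed_word_count(lines, n) == reference_word_count(lines)
print(reference_word_count(lines)["data"])  # 3
```

Varying the partition count matters because many processing bugs only appear when related records land in different partitions.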

7. Regression testing

Ensure that new changes do not break existing functionality. Re-run previously executed tests to confirm that existing features still work correctly after each change. Automation tools like Selenium, QTP (now UFT), and Apache JMeter are useful here.
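For data pipelines, a regression test often compares a run's summary metrics with a baseline saved from a known-good run. The sketch below assumes the baseline is a dict that could, for example, be loaded from a JSON file kept with the tests. It also assumes records carry a numeric `amount` field. The metrics and function names are hypothetical.

```python
import math


def summarize(records):
    """Hypothetical metrics produced by the pipeline under test."""
    amounts = [r["amount"] for r in records]
    return {
        "row_count": len(amounts),
        "total_amount": sum(amounts),
        "max_amount": max(amounts),
    }


def compare_to_baseline(current, baseline, rel_tol=1e-9):
    """Return the names of metrics that are missing or drifted from the baseline."""
    drifted = []
    for name, expected in baseline.items():
        actual = current.get(name)
        if actual is None or not math.isclose(actual, expected, rel_tol=rel_tol):
            drifted.append(name)
    return drifted


baseline = {"row_count": 3, "total_amount": 60.0, "max_amount": 30.0}
records = [{"amount": 10.0}, {"amount": 20.0}, {"amount": 30.0}]
print(compare_to_baseline(summarize(records), baseline))  # []
```

A small relative tolerance keeps the comparison robust to harmless floating-point differences. A metric that changes on purpose should be updated in the baseline, not hidden by the tolerance.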

Benefits of Big Data Testing

Big data testing has a number of benefits that enable organizations to accurately determine the value of the data they handle. Businesses can derive meaningful insights and make informed decisions by ensuring data accuracy, completeness, and consistency. Here are some key benefits of big data testing:

- Improved data quality: Big data testing makes it easier to detect and fix data issues before the data is used.

- Faster time-to-market: Speeds up application delivery by catching issues early, before they become major problems.

- Reduced costs: Lowers costs by identifying and fixing problems early in development, when they are cheaper to fix.

- Improved customer experience: Helps ensure applications meet customer needs from the start by resolving issues early.

Challenges in Big Data Testing

Big data testing is complex and comes with unique challenges. Tackling these is crucial for the success and performance of big data applications. Here are the main challenges:

- Scalability: Testing is tough because these applications handle huge amounts of data.

- Complexity: These systems have many components, making testing complicated.

- Performance: Ensuring high performance with large data volumes is challenging.

- Data variety: Structured, semi-structured, and unstructured data from many different sources makes testing harder.

Big Data Testing vs. Traditional Database Testing

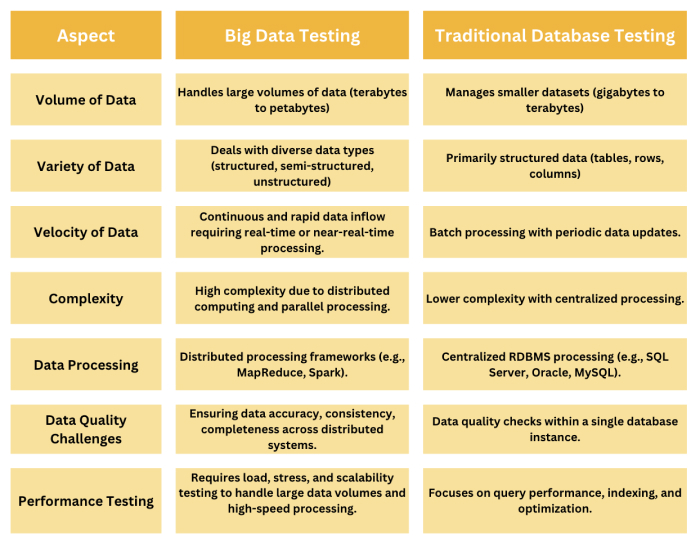

Big data testing and traditional database testing both aim to ensure the quality and reliability of data systems. However, they differ significantly in their approach, tools, and challenges due to the scale and complexity of the data involved.

Best Practices for Successful Big Data Testing

To effectively test big data applications, it’s important to follow best practices that address their complexity and scalability. Here are some key practices:

- Develop a testing strategy: Create a strategy that takes into account the complexity and scalability of big data applications.

- Use specialized tools: Utilize tools specifically designed for handling the unique challenges of big data applications.

- Automate testing: Implement automated testing to save time and reduce costs.

- Involve stakeholders: Engage stakeholders in the testing process to ensure the applications meet customer needs.

- Validate data quality: Regularly check data for accuracy, completeness, and consistency. Clean up any errors to maintain high-quality data.

- Perform scalability testing: Test how the application performs with increased data loads to ensure it scales effectively.

- Monitor and log performance: Track performance metrics and resource usage to quickly identify and resolve any issues.
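As a small illustration of the monitoring practice above, you can wrap each pipeline stage so that it logs its duration and output size. The sketch below uses only Python's standard `logging` and `time` modules. The decorator name `monitored` and the example `clean` stage are hypothetical. A production setup would usually send these metrics to a monitoring system rather than plain logs.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("pipeline")


def monitored(stage_name):
    """Decorator that logs how long a pipeline stage takes and how many records it returns."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = func(*args, **kwargs)
            elapsed = time.perf_counter() - start
            size = len(result) if hasattr(result, "__len__") else None
            logger.info("stage=%s seconds=%.3f records=%s", stage_name, elapsed, size)
            return result
        return wrapper
    return decorator


@monitored("clean")
def clean(records):
    """Hypothetical stage: drop records with a missing value."""
    return [r for r in records if r.get("value") is not None]


clean([{"value": 1}, {"value": None}])  # logs: stage=clean seconds=... records=1
```

Logging record counts next to timings helps separate a slow stage from one that is silently dropping or multiplying data.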

Concluding Thoughts

Big data testing is an essential part of any big data system, because it is how teams confirm that their applications work correctly.

Managing big data has distinct characteristics because of the size and nature of the data sets involved, and specialized tools and methods can help minimize the risks of using it.

When done correctly, big data testing leads to better data quality, faster delivery, and lower costs for the client.

Thank you for reading.