Code Review

One of the most fundamental steps in the software development lifecycle is code review. Why? Because it helps catch bugs and other issues before they cause problems in production. It takes both knowledge and experience to spot the kinds of issues that might not be obvious now but could blindside you and lead to real trouble later on. Many developers, especially junior ones, find this step challenging because it’s a repetitive, detail-oriented process that demands careful attention before code can be merged into the main codebase.

As a junior developer, I used to skip writing comments because I thought, “The code is self-explanatory.” Weeks later, a teammate asked about a critical function, and I had no idea what it did anymore. That’s when it clicked: code review isn’t just about the present. It’s about writing for the future, for your team, your future self, and anyone who’ll read your code later.

Code review isn’t about pointing fingers; it’s about protecting the product, the people, and the process. A single missed bug can cause serious production issues or even introduce a security vulnerability. That’s why doing code review well matters so much.

The good news? AI might be able to help. According to the 2024 Stack Overflow Developer Survey, 81% of developers say AI boosts productivity. In this article, we’ll explore what makes code reviews effective, from everyday habits to evolving tools, and how AI is becoming a powerful part of that journey.

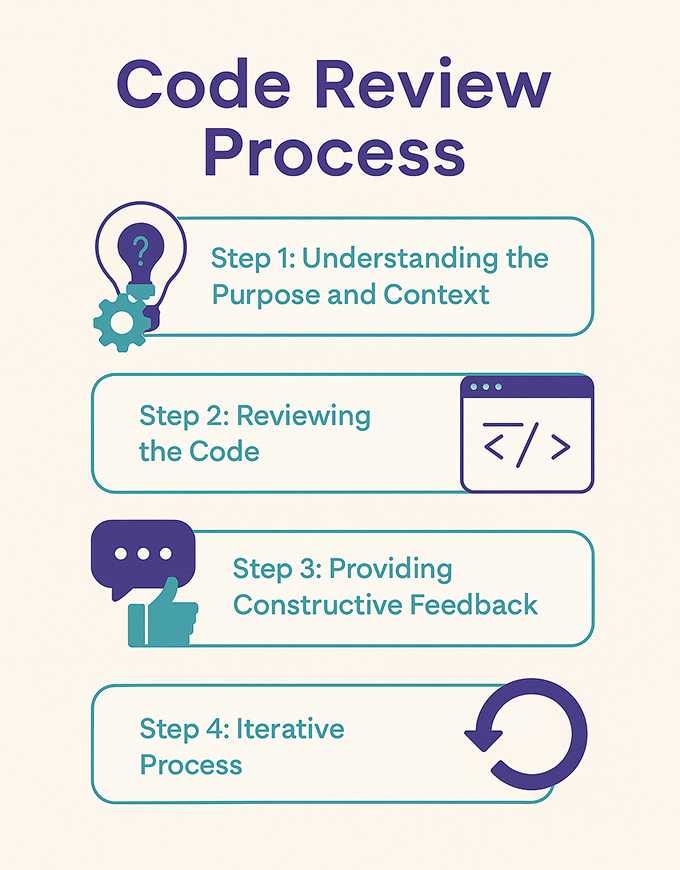

Code Review Process

This process isn’t just a technical code review checklist – it’s more like an ongoing conversation about the code we write. It has its own rhythm, shaped by collaboration, learning, and shared goals.

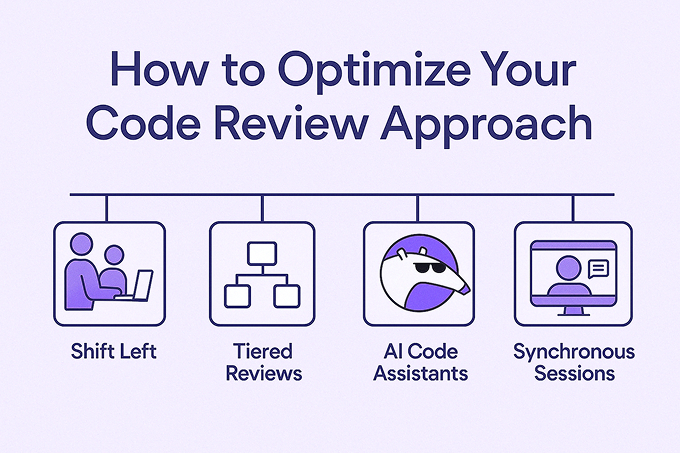

Let’s walk through the core parts of that process together. The diagram above outlines four key steps that can help make code reviews smoother and more productive.

Step 1: Understanding the Purpose and Context

As a code reviewer, your first task is to understand the purpose of the code, including the use cases it addresses. It’s like laying the foundation of a building: everything else builds on this understanding.

Take, for example, a method that processes user payment data. Your first question should be what the method does with that data: does it validate the input formats, or does it calculate the total amount and apply discounts?
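To make that question concrete, here is a minimal Python sketch of what such a method might look like. The name `process_payment`, the item dictionary shape, and the validation rules are all hypothetical; the point is that one method can carry two different purposes, and the reviewer needs to know which one (or both) they’re evaluating.

```python
from decimal import Decimal


def process_payment(items: list[dict]) -> Decimal:
    """Hypothetical method under review: validates items, then totals them."""
    # Purpose 1: validate input formats (hypothetical rules).
    for item in items:
        price = item.get("price")
        quantity = item.get("quantity")
        if not isinstance(price, Decimal) or price < 0:
            raise ValueError(f"invalid price in {item!r}")
        if not isinstance(quantity, int) or quantity <= 0:
            raise ValueError(f"invalid quantity in {item!r}")

    # Purpose 2: calculate the total amount owed.
    return sum((item["price"] * item["quantity"] for item in items), Decimal("0"))
```

A reviewer who reads this as “just a total calculation” could easily miss that changing the validation rules changes which payments are accepted at all.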

Step 2: Reviewing the Code

Once the reviewer has understood the main functionality and context of the code, the actual review begins. The reviewer’s role is not just to point out bugs or comment on whether the updated code looks fine, but to think critically about whether the code will continue to work well in the future.

For instance, once we’ve established that the method calculates the total amount of a payment, the reviewer should check that new use cases, such as calculating the total discount applied, can be added later without breaking existing behavior or requiring a rewrite.

Step 3: Providing Constructive Feedback

With the review complete, providing constructive feedback can go a long way. Good feedback doesn’t just highlight issues – it explains why they matter and offers suggestions for improvement.

In our example, suppose we find that the method calculating the total amount isn’t structured to support future use cases. Constructive feedback might look like this: “The code works, but how about extracting the calculation logic into a separate utility function? That way, if we later want to add functionality such as applying discounts to the total, we can do it without reworking this method.” Feedback like this names the problem, explains why it matters, and proposes a concrete fix.
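Here is one way the suggested refactor could look in Python. It’s a sketch under assumptions: the function names (`order_subtotal`, `apply_discount`, `calculate_total`, `total_discount`), the item shape, and the percentage discount with half-up rounding to cents are hypothetical choices, not a prescribed design. The idea is that once the subtotal lives in its own helper, a discount use case becomes an addition rather than a rewrite.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def order_subtotal(items: list[dict]) -> Decimal:
    """Extracted utility: sum price * quantity for every line item."""
    return sum((item["price"] * item["quantity"] for item in items), Decimal("0"))


def apply_discount(amount: Decimal, percent: Decimal) -> Decimal:
    """Return `amount` reduced by `percent`, rounded to cents."""
    if not Decimal("0") <= percent <= Decimal("100"):
        raise ValueError("discount percent must be between 0 and 100")
    discounted = amount * (Decimal("100") - percent) / Decimal("100")
    return discounted.quantize(CENT, rounding=ROUND_HALF_UP)


def calculate_total(items: list[dict], discount_percent: Decimal = Decimal("0")) -> Decimal:
    """Total owed after an optional percentage discount."""
    return apply_discount(order_subtotal(items), discount_percent)


def total_discount(items: list[dict], discount_percent: Decimal) -> Decimal:
    """New use case: how much the discount saved, reusing the same helpers."""
    subtotal = order_subtotal(items).quantize(CENT, rounding=ROUND_HALF_UP)
    return subtotal - calculate_total(items, discount_percent)
```

Because `total_discount` only composes existing helpers, adding it doesn’t touch the code paths that already compute totals.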

Step 4: Iterative Process

Finally, code review isn’t a one-time event; it’s an iterative process. Developers address the feedback, make changes, and may go through multiple review cycles before the code is approved and merged.

Now that we understand the code review process, let’s move on to best practices for effective code reviews.

Code Review Best Practices

What to Focus on During a Code Review

When you open a pull request for review and aren’t sure where to begin, focus on these critical aspects that experienced reviewers prioritize:

- Logical Soundness: Does the solution actually solve the problem? As one reviewer put it: “Even if the code is technically correct, it might still be a suboptimal choice for the given context.” Code that runs isn’t necessarily the best solution.

- Future Scalability: Will this code hold up as the system grows? Some code works today but causes maintenance headaches later. Great reviewers think beyond the immediate functionality and consider the long-term impact.

- Side Effects and Risk Management: Could this change affect other parts of the system? We need to be cautious of state changes that could have ripple effects or introduce single points of failure. Sometimes, the most dangerous bugs aren’t in what the code does directly, but in what it unintentionally impacts elsewhere.

- Testing Rigor: Are the tests an afterthought or genuinely valuable? Meaningful tests focus on verifying the behavior of the code under different conditions, rather than simply boosting coverage metrics. They should properly test the business logic, both from a unit test perspective and by providing reasonable evidence that the code works as expected in staging environments.

- Documentation Updates: Documentation often gets overlooked in fast-paced development, but it’s essential in the long run. Future developers (including your future self) will appreciate it if you keep the documentation up to date with code changes.
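To illustrate the Testing Rigor point, tests that verify behavior rather than pad coverage, here is a small Python `unittest` sketch. The `shipping_fee` function, its $50 free-shipping threshold, and the $4.99 flat fee are hypothetical business rules; what matters is that each test pins down one behavior, including the boundary (exactly at the threshold) and an invalid input.

```python
import unittest
from decimal import Decimal

FREE_SHIPPING_THRESHOLD = Decimal("50.00")  # hypothetical business rule
FLAT_FEE = Decimal("4.99")                  # hypothetical flat fee


def shipping_fee(subtotal: Decimal) -> Decimal:
    """Hypothetical rule: orders at or above the threshold ship free."""
    if subtotal < 0:
        raise ValueError("subtotal cannot be negative")
    return Decimal("0.00") if subtotal >= FREE_SHIPPING_THRESHOLD else FLAT_FEE


class ShippingFeeTest(unittest.TestCase):
    def test_below_threshold_pays_flat_fee(self):
        self.assertEqual(shipping_fee(Decimal("49.99")), FLAT_FEE)

    def test_exactly_at_threshold_ships_free(self):
        # Boundary case: the rule says "at or above", so 50.00 is free.
        self.assertEqual(shipping_fee(Decimal("50.00")), Decimal("0.00"))

    def test_negative_subtotal_is_rejected(self):
        with self.assertRaises(ValueError):
            shipping_fee(Decimal("-1"))
```

Saved as a module, this runs with `python -m unittest <module_name>`. If someone later changes `>=` to `>`, the boundary test fails, which is exactly the kind of regression a coverage number alone wouldn’t reveal.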

Strategies for Reviewing Code Efficiently

The most efficient reviewers have developed strategies that balance thoroughness with practicality:

- Start with readability and simplicity. Code that’s difficult to understand is difficult to maintain. Clear, self-explanatory code reduces cognitive load for both current reviewers and the future developers who’ll maintain it.

- Adjust your approach based on who wrote the code. Junior developers benefit from detailed explanations of the “why” behind suggestions, while senior developers might need more focus on architectural concerns. This personalized approach respects experience levels while ensuring quality.

- Let automation handle the basics. “Using automated tools for routine checks” saves human attention for what truly matters. When linters and static analysis tools catch formatting and simple issues, you can focus on the higher-order concerns like architecture and business logic.

- Consider the review in layers. Start with a high-level overview before diving into details. This way, you can catch larger architectural issues that might get missed if you jump straight into reviewing individual lines of code.

Common Mistakes to Avoid in Code Reviews

These are some common mistakes that even experienced developers can make. After reading through many online discussions where developers shared the errors they’ve run into during code reviews, I was able to identify these recurring issues:

- Excessive nitpicking: Nothing deflates a developer’s spirit faster than a barrage of comments about minor formatting issues. When style becomes the primary focus, it can discourage developers and undermine the credibility of the entire process. Keep feedback constructive and focus on the bigger picture.

- Allowing oversized PRs: Large PRs often lead to shallow feedback, because reviewers can’t keep every change in mind at once. Encourage smaller, focused PRs, and if your comments start to outnumber the lines of code, jump on a call instead; a quick discussion can clarify the changes more effectively than another round of comments.

- Manual verification of what could be automated: Your time and focus are valuable in code review. Don’t spend it on tasks automation can handle. Set up strong CI pipelines so you can dedicate your attention to the more complex issues that truly need your expertise.

- Inconsistent standards: When expectations change from reviewer to reviewer, developers get confused and reviews feel like a moving target. Document your team’s expectations and stick to them to build trust and clarity in the process.
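Some of this can be handed to CI. The Python sketch below totals the added and deleted lines reported by `git diff --numstat` (tab-separated added count, deleted count, and path, with `-` in place of counts for binary files) and flags a PR that exceeds a size limit. The 400-line limit and the function names are hypothetical; pick a threshold that matches your team’s norms.

```python
MAX_CHANGED_LINES = 400  # hypothetical team limit


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        if not line.strip():
            continue
        added, deleted, _path = line.split("\t", 2)
        # Binary files are reported as "-\t-\tpath" and have no line counts.
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def check_pr_size(numstat: str, limit: int = MAX_CHANGED_LINES) -> tuple[bool, str]:
    """Return (ok, message) so a CI job can pass or fail with a clear reason."""
    total = changed_lines(numstat)
    if total > limit:
        return False, (
            f"{total} changed lines exceeds the limit of {limit}; "
            "consider splitting the PR or walking through it on a call"
        )
    return True, f"{total} changed lines, within the limit of {limit}"
```

In a pipeline you might feed it the output of `git diff --numstat origin/main...HEAD` (for example via `subprocess.run([...], capture_output=True, text=True).stdout`) and fail the job when the first returned value is `False`.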

How to Provide Feedback Developers Actually Appreciate

The way feedback is delivered dramatically affects how it’s received. Be specific and actionable. Instead of vague criticism like “This code is confusing,” offer concrete suggestions such as “Consider extracting this logic into a named function to clarify its purpose.” Clear guidance helps developers improve. Even if you’re approving the changes, if you see room for improvement, such as clearer comments, don’t just write “LGTM.” Include thoughtful feedback to guide the developer toward better practices.

Clearly distinguish mandatory changes from suggestions. Many teams use labels like “Blocker,” “Nitpick,” or “For consideration” to separate critical changes from optional suggestions, which helps set proper expectations.

Balance criticism with positive reinforcement. Good reviews are empathetic; as one developer said, “They recognize the effort behind the code.” Acknowledging clever solutions helps create a positive, welcoming review culture.

Frame feedback as questions when appropriate. “Have you considered handling this edge case?” often leads to better discussion, while “You forgot to handle this edge case” sounds more corrective.

When to Approve vs. When to Request Changes

Clear guidelines for when to approve code help keep the review process smooth. If the change does what it’s supposed to and keeps the codebase in good shape (or even improves it), it’s okay to approve, even if a few small things could be polished later. Don’t let the pursuit of perfection slow everyone down, especially in fast-moving systems. It’s about making steady improvements together.

Request changes when there are critical bugs, security risks, major performance issues, or if something doesn’t align with team standards. Also, if important tests are missing or there are architectural concerns that could lead to long-term challenges, it’s okay to ask for revisions.
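One way to make the approve-or-request-changes rule explicit is to tag each comment with labels like “Blocker,” “Nitpick,” and “For consideration,” and let only unresolved blockers hold up approval. This Python sketch is hypothetical: the `Severity` enum, `ReviewComment` dataclass, and `review_decision` function are illustrative names, not part of any review tool’s API.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    BLOCKER = "Blocker"                      # must be fixed before merge
    NITPICK = "Nitpick"                      # optional polish
    FOR_CONSIDERATION = "For consideration"  # worth discussing, not required


@dataclass
class ReviewComment:
    severity: Severity
    text: str
    resolved: bool = False


def review_decision(comments: list[ReviewComment]) -> str:
    """Approve unless at least one blocker is still unresolved."""
    open_blockers = [
        c for c in comments if c.severity is Severity.BLOCKER and not c.resolved
    ]
    return "request changes" if open_blockers else "approve"
```

With this rule, a PR carrying a dozen nitpicks can still be approved, while a single open security or correctness blocker is enough to send it back.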

Remember that the goal is continuous improvement, not perfection. Focus on code that’s better than what was there before, rather than expecting something flawless. Every codebase is a journey, and each step forward brings us closer to a stronger product.

The Iterative Nature of Code Reviews

Code reviews rarely wrap up after just one round of feedback. They’re naturally iterative, with changes and improvements based on reviewer comments, followed by a re-review of the updated code.

I remember when I was a junior developer adding a cool button to the screen for a new feature. I thought I had nailed it: great colors, smooth animations. But during the review, a senior developer pointed out that the button lacked accessibility support and didn’t align with our design system. After making the suggested changes, I submitted it for re-review. The second round went much better, and my senior colleague noticed that I had taken the suggestions seriously. This back-and-forth showed me how important it is to iterate, refine, and stay open to feedback, which ultimately improved both the code and my development skills.

Managing this iterative process requires balance. While multiple review cycles improve quality, they can also cause delays and frustration if not managed well. Google’s research shows the benefit of keeping things moving: 70% of changes are committed less than 24 hours after the initial review. That quick turnaround shows how effective reviews can be when they’re part of a smooth, collaborative process instead of an obstacle.

As changes are made, reviewers should focus primarily on whether their original concerns have been addressed, rather than introducing new ones unless absolutely necessary. This prevents “review scope creep,” where new issues are raised in each round and the process drags on endlessly. Keeping the scope stable maintains momentum, so quality improves without losing sight of progress.

Rethinking Traditional Code Review Practices

Is traditional code review a waste of time?

Some developers question whether traditional code review practices provide enough value to justify their cost. Critics point out several issues:

- Reviews often come too late in the development process, when changes are already expensive.

- The feedback loop is slow, sometimes taking days for changes to be approved.

- Reviewers frequently lack the context needed to give truly valuable feedback.

- Reviews can become mere rubber-stamping exercises as teams rush to ship.

These critiques don’t mean that code reviews are useless; they suggest that the process needs to evolve. The most effective teams now see code reviews as an ongoing part of a continuous feedback loop, rather than isolated events.

How to Optimize or Transform Your Code Review Approach

To make your code review process more efficient and effective, consider “shift-left” approaches that bring review into earlier stages of development. For example, pair programming, where code is written and reviewed in real time, catches issues as they arise rather than after the code is complete.

Implement tiered review processes based on risk and complexity. Critical components get thorough reviews from multiple team members, while lower-risk changes undergo lighter reviews. AI code assistants can be used to catch issues before they reach the review stage. According to Stack Overflow’s 2024 survey, 82% of developers using AI tools employ them for writing code. These tools guide developers toward better solutions and provide immediate feedback to prevent common issues.
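A tiered policy can be as simple as a function that maps a change to a review level. In this hypothetical Python sketch, the high-risk path prefixes, the 200-line cutoff, and the number of required approvals are placeholders you would replace with your own team’s rules.

```python
from typing import NamedTuple

# Hypothetical paths that always warrant a thorough, multi-reviewer pass.
HIGH_RISK_PREFIXES = ("payments/", "auth/", "migrations/")
LARGE_CHANGE_LINES = 200  # hypothetical cutoff for a full read-through


class ReviewTier(NamedTuple):
    name: str
    required_approvals: int


def review_tier(changed_paths: list[str], changed_lines: int) -> ReviewTier:
    """Pick review depth from what the change touches and how big it is."""
    # str.startswith accepts a tuple, so one call checks every prefix.
    if any(path.startswith(HIGH_RISK_PREFIXES) for path in changed_paths):
        return ReviewTier("thorough", required_approvals=2)
    if changed_lines > LARGE_CHANGE_LINES:
        return ReviewTier("standard", required_approvals=1)
    return ReviewTier("light", required_approvals=1)
```

Risk is checked before size on purpose: a two-line change to payment code still gets the thorough tier.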

Supplement asynchronous reviews with synchronous sessions for complex changes. A 30-minute screen-sharing session can often accomplish more than days of comment exchanges. These real-time discussions build shared understanding and resolve ambiguities quickly.

AI in Modern Code Review Processes

AI-powered code review tools are transforming the way teams approach software reviews. These tools automate many aspects of the process, providing instant feedback and freeing up human reviewers to focus on higher-level concerns like architecture, design patterns, and maintainability.

One such tool is Qodo Merge, which I’ve personally used, and I was genuinely impressed by its intelligent AI code testing capabilities. It integrates seamlessly with GitHub pull requests and reviews code based on industry best practices we’ve come to rely on.

Let’s take a closer look at some of the standout features Qodo Merge offers:

- Automatically generates pull request descriptions and guides reviewers through the code changes

- Surfaces and prioritizes potential bugs and issues

- Ensures that the code complies with ticket or task requirements

- Applies consistent review standards across the entire team

These AI tools have truly proven themselves to be a blessing for developers during the code review cycle, saving time, reducing manual effort, and improving the overall code quality.

One of my friends built a startup and had no idea how to review his project’s code. Since he was on a tight budget, he couldn’t afford to hire a separate code reviewer. So, I suggested he use Qodo as an AI code reviewer. Just a week later, he told me that their work efficiency had increased by almost 70% simply by using it, and he’s now expecting even more from the tool – all with just a $15 subscription for the PR agent capabilities.

Creating a Culture of Effective Code Review

Beyond all the tools and processes, the heart of successful code review is culture, and that belongs in any definition of code review. The most effective teams don’t treat reviews like gatekeeping rituals; they treat them as opportunities to grow together and make the code stronger as a team.

As one developer put it, “Better code reviews are also empathetic. They know that the person writing the code spent a lot of time and effort on this change. These code reviews are kind and unassuming. They applaud nice solutions and are all-round positive.”

That doesn’t mean we lower our standards. It just means we recognize that code review is a human process, not just a technical one. When we focus on the code rather than the coder, we create a space where people feel safe sharing their work and are open to improving it.

The goal isn’t perfect code; it’s steady, thoughtful progress. It’s about improving the code, yes, but also about growing as developers. If your organization isn’t doing code reviews yet, start now. It’s a small step that can make everyone a better engineer.

So whether you’re setting up code reviews for the first time or just trying to improve them, remember: the best reviews are both technically sound and kind. Approach each one with clarity and care, and you won’t just write better software; you’ll help build a healthier, more supportive team.

Conclusion

Code reviews are more than just technical checkpoints; they’re collaborative conversations that strengthen both code and teams. When done well, they catch bugs early, promote knowledge sharing, and build a culture of continuous improvement. The most effective reviews balance thoroughness with efficiency, focusing on logical soundness, future scalability, and constructive feedback rather than nitpicking.

As development practices evolve, so does our approach to code reviews. Whether through AI-assisted tools that handle routine checks, synchronous review sessions for complex changes, or simply fostering an environment where feedback is given with empathy, the goal remains the same: steady progress toward better code.

Remember that perfect code isn’t the objective; improvement is. By treating code reviews as opportunities for growth rather than gatekeeping exercises, we create stronger codebases and more capable developers. In the end, the best reviews aren’t just technically sound; they’re kind, specific, and focused on the code, not the coder.

Frequently Asked Questions

Is code review really necessary with continuous integration pipelines?

Absolutely! I used to think the same thing when I first worked with robust CI pipelines. “If the tests pass, why do we need another human to look at it?” But I quickly learned that while CI tools are great at catching syntax errors and test failures, they can’t evaluate whether your solution actually makes sense in the bigger picture. A machine won’t tell you when you’ve written something that’s technically correct but will be a nightmare to maintain six months from now. Code reviews bring that human insight-checking if your code aligns with business requirements, if your approach is maintainable, and often catching subtle logic issues that tests might miss.

Why do some developers consider code reviews a waste of time?

I’ve been in both camps on this one. When reviews become bottlenecks where PRs sit for days, or when they turn into debates about tabs versus spaces instead of actual issues, they definitely feel like a waste. I remember one team where reviews were just rubber-stamp exercises to check a process box; those were truly a waste of time. The skepticism usually comes from experiencing poorly implemented reviews: ones that happen too late, lack clear standards, or focus on the wrong things. But when done right, with automation handling the routine checks and humans focusing on what matters, they’re incredibly valuable.

How can teams make the code review process more efficient in Agile environments?

In my experience working with Agile teams, the code review process benefits a lot from a “shift-left” approach: don’t wait until the end to review! Try pair programming for complex features, and keep PRs small and focused (your reviewers will thank you). Let automated tools handle style checks and basic validations so human reviewers can focus on logic and design decisions. Clear guidelines help too; I’ve seen teams waste hours debating the same points repeatedly until they finally documented their standards.

For really complex changes, don’t rely on asynchronous comments alone. A 30-minute screen-sharing session can accomplish what might otherwise take days of back-and-forth comments. And make sure you account for code reviews in your sprint planning: they’re part of development, not an afterthought!

What specific benefits does code review bring beyond just finding bugs?

Code reviews have shaped my career in ways I never expected. Yes, they catch bugs, but some of my biggest growth moments came when a senior developer questioned my approach and showed me a more elegant solution. That knowledge sharing is invaluable. Reviews also build a sense of shared ownership: I care more about code I’ve reviewed and feel more confident changing it later. They help preserve institutional memory too; I can’t count how many times review comments have explained WHY something was done a certain way, saving future developers from repeating mistakes.

What skills or qualifications make someone an effective code reviewer?

The best reviewers I’ve worked with combine technical expertise with strong communication skills. They understand architecture and security principles but can explain issues without making you feel inadequate. They focus on what matters instead of nitpicking every variable name. They ask questions instead of making accusations, writing “Would a shorter name, like userData, improve readability while staying descriptive?” rather than “That variable name is too long.” And they suggest alternatives when identifying problems, showing they’re invested in finding a solution, not just pointing out flaws.

Which are currently considered the best code review tools by developers?

The code review tools gaining the most traction among developers right now are Qodo and GitHub Copilot, both AI-powered tools that bring intelligent automation into the review process and help teams shift their focus from routine checks to higher-level thinking.

When performing code reviews, what critical issues should reviewers prioritize?

In my early days, I focused too much on small things like formatting and variable names, and ended up missing the bigger issues in the code. Now I know to focus first on the things that could actually cause problems, like:

- Security vulnerabilities that could expose user data

- Performance issues that might slow down the application

- Architectural decisions that affect long-term maintainability

- Proper error handling, especially in edge cases

These high-impact issues pose the greatest risk if they’re missed. I once overlooked a subtle input validation bug during a review, and it made it to production, which taught me to always prioritize these critical areas first.
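To show how subtle validation bugs can be, here is a hypothetical Python example (not the bug from my review). Python’s `int()` happily accepts strings like `"-3"`, `"+3"`, `" 3 "`, and even `"1_000"`, so a quantity field parsed with a bare `int(raw)` can let negative or oddly formatted values through. The sketch below accepts only plain ASCII digits and enforces a range; the bounds of 1 to 100 and the name `parse_quantity` are assumptions.

```python
import re

# Only plain ASCII digits: rejects signs, spaces, decimals, and underscores.
_DIGITS = re.compile(r"[0-9]+")


def parse_quantity(raw: str, min_qty: int = 1, max_qty: int = 100) -> int:
    """Hypothetical validator for a quantity submitted from a form."""
    if not _DIGITS.fullmatch(raw):
        raise ValueError(f"quantity must be a whole number, got {raw!r}")
    quantity = int(raw)
    # Range check catches "0" and absurdly large orders.
    if not min_qty <= quantity <= max_qty:
        raise ValueError(f"quantity must be between {min_qty} and {max_qty}, got {quantity}")
    return quantity
```

In a review, the question to ask of code like this is simply: “What inputs does the parser accept that the business rule doesn’t?”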

How does regular code review enhance overall code reliability?

I’ve seen firsthand how teams that commit to regular reviews end up with more reliable codebases. It’s partly because they establish consistent patterns for error handling and ensure comprehensive test coverage. But it’s also because multiple sets of eyes simply catch more edge cases than one person working alone. On my current team, reviews have helped us reduce our production incidents by nearly 40% year-over-year, largely because potential issues get identified before the code ever leaves the development environment.

What are some practical tips for conducting more effective and less time-consuming code reviews?

The biggest game-changer for me was learning to review code in small batches rather than trying to process hundreds of lines at once. I also keep a mental checklist of critical items to look for, and I set a time limit for initial passes-if I can’t understand the changes within 30 minutes, that’s valuable feedback in itself! For simple issues, asynchronous comments work fine, but for complex ones, I’ll schedule a quick call. And of course, leverage those AI tools to handle routine checks-they’ve saved me countless hours of checking formatting and basic patterns.

How detailed should a code reviewer be when providing feedback?

It depends on the context! When I’m reviewing code from junior developers on my team, I take the time to explain not just what should change but why it matters. Those teaching moments are incredibly valuable. For routine changes from experienced team members, I might focus more on architecture and approach rather than implementation details. The key is making feedback specific enough to be useful. Instead of saying “this is hard to follow,” you could say, “Consider adding a comment here to explain the reasoning behind this condition; it’s not immediately obvious why it’s needed.” This gives clear direction.

What should junior developers specifically look for during a code review?

When I was a junior developer, code reviews felt intimidating, as if I wouldn’t have anything valuable to contribute. But I found I could learn a ton by examining how new changes fit into the larger system and identifying patterns used by senior developers. Focus on fundamentals like proper error handling, input validation, and test coverage. And don’t underestimate the value of checking readability and documentation; sometimes fresh eyes see clarity issues that more experienced developers miss!

What goals should software teams set to measure the success of code reviews?

On my team, we look at both numbers and quality improvements. We track defect reduction in production (the ultimate goal!), how knowledge is spreading across the team (measured through code ownership patterns), and practical metrics like review time and rework needed after reviews. But some of the most important indicators come from team satisfaction-are people finding value in the process or seeing it as a burden? It’s about balancing quantitative metrics with the qualitative improvement of code quality over time.
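For the quantitative side, review time is straightforward to compute once you export pull request timestamps. This Python sketch assumes a hypothetical record with `requested_at` and `approved_at` datetimes and a `review_rounds` count; it reports the median turnaround, the share of PRs approved within 24 hours, and the average number of review rounds as a rough proxy for rework.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median


@dataclass
class PullRequestRecord:
    requested_at: datetime  # when review was first requested (hypothetical field)
    approved_at: datetime   # when the final approval landed (hypothetical field)
    review_rounds: int      # how many review cycles it took


def review_metrics(prs: list[PullRequestRecord],
                   window: timedelta = timedelta(hours=24)) -> dict:
    """Summarize turnaround and rework for a non-empty batch of PRs."""
    if not prs:
        raise ValueError("need at least one pull request")
    durations = [pr.approved_at - pr.requested_at for pr in prs]
    hours = [d.total_seconds() / 3600 for d in durations]
    return {
        "median_hours": median(hours),
        "share_within_window": sum(d <= window for d in durations) / len(durations),
        "avg_review_rounds": mean(pr.review_rounds for pr in prs),
    }
```

The median is used instead of the mean so that one PR stuck for a week doesn’t hide an otherwise healthy turnaround.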

Are automated code review tools sufficient to ensure high-quality software?

I wish it were that simple! Automated tools have transformed our workflow by handling routine checks, but they’re just one piece of the puzzle. They can’t fully evaluate design decisions or business logic correctness-those still need human judgment. I remember a time when our automated checks gave a clean bill of health to a perfectly formatted, syntactically correct piece of code that completely misunderstood the business requirement. The most effective approach combines automated scanning with human review focused on those higher-level concerns.

Can outsourcing code review as a service improve code quality, and when is it beneficial?

In certain scenarios, absolutely. I worked with a team that brought in specialized security reviewers for a particularly sensitive project, and they caught several issues our team might have missed. Outside reviews are especially helpful when you need specific expertise, face bandwidth constraints, or want an unbiased assessment. They’re particularly valuable for security-critical applications or specialized domains. However, they work best as a complement to internal reviews, because external reviewers often lack crucial context about your business requirements and system history.

How do you balance thoroughness and efficiency in the code review process?

This is the eternal challenge! I’ve found success with a tiered approach that adjusts review depth based on risk and complexity. Critical security components get a thorough review, while routine changes might get a lighter touch. Automating the repetitive aspects helps too. I’ve learned to focus on high-impact issues rather than pursuing perfection, and to timebox review sessions to avoid diminishing returns. The goal isn’t perfect code; it’s steady, thoughtful progress that makes each version better than the last.

How do you review ‘vibe-coded’ PRs without killing the flow?

Vibe coding means describing what you want in natural language and letting an AI agent write most of the code with a high degree of autonomy. Imagine you’re a game developer vibe-coding a cool anteater game where the anteater shoots bugs and saves you from them. You’ve just added a new feature that lets the anteater collect coins while killing bugs. Before submitting your pull request, you’d probably like to test your code, so you use an AI agent like Qodo to generate unit tests for your new method.
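Here is roughly what that could look like. The `Anteater` class and its `defeat_bug` method are hypothetical stand-ins for the new coin feature, and the tests are the kind a developer or an AI test generator might produce; this is an illustrative sketch, not actual Qodo output. Note that the tests cover an edge case (a negative reward) as well as the happy path.

```python
import unittest
from dataclasses import dataclass


@dataclass
class Anteater:
    """Hypothetical game entity: earns coins for every bug it defeats."""
    coins: int = 0
    bugs_defeated: int = 0

    def defeat_bug(self, coin_reward: int = 1) -> None:
        # Validate before mutating so a bad call leaves the state untouched.
        if coin_reward < 0:
            raise ValueError("coin_reward cannot be negative")
        self.bugs_defeated += 1
        self.coins += coin_reward


class AnteaterCoinTest(unittest.TestCase):
    def test_starts_with_no_coins(self):
        self.assertEqual(Anteater().coins, 0)

    def test_defeating_a_bug_awards_coins(self):
        anteater = Anteater()
        anteater.defeat_bug(coin_reward=5)
        self.assertEqual((anteater.coins, anteater.bugs_defeated), (5, 1))

    def test_rewards_accumulate_across_bugs(self):
        anteater = Anteater()
        for _ in range(3):
            anteater.defeat_bug()
        self.assertEqual(anteater.coins, 3)

    def test_negative_reward_is_rejected_without_side_effects(self):
        anteater = Anteater()
        with self.assertRaises(ValueError):
            anteater.defeat_bug(coin_reward=-1)
        self.assertEqual((anteater.coins, anteater.bugs_defeated), (0, 0))
```

Whoever or whatever writes the tests, a reviewer should still check that they assert on behavior (coins and bug counts), not just that the method runs.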

Once testing is complete, you’re ready to submit your ‘vibe-coded’ PR to the main codebase and want automated code review assistance. At this stage, you can use an AI agent such as Qodo Merge to review your pull request. It integrates into your code review process, checks most aspects of the code against your documentation, from style to interface consistency, and helps you review vibe-coded PRs without killing the flow.